For the last several months I’ve noticed what appears to be a greater than normal number of patient test results where the VA from the DLCO test was greater than the TLC. This is not impossible of course, but it tends to be rare, and when I’ve seen it in the past and inspected the results closely there were usually either problems with the lung volume test or the difference was only a few percent and within the error bars for both tests. We’ve been seeing VAs that were larger than TLC more frequently lately, but when I look at the results closely most of the time I have been unable to see anything wrong with either the lung volume or the DLCO test. At the same time, we have a number of patients who are frequent fliers, and among them we have seen what look to be bigger differences in DLCO from visit to visit than usual, as well as a number of patients who have had larger DLCO results than we would have expected.

The problem is that these apparent problems are really just suspicions with very little real evidence. I’ve been paying very close attention to lung volumes since our hardware and software upgrade last summer so my paranoia level is on the high side and I may well be overreacting. Late last week however, I found myself on the horns of a dilemma. The test results for a patient with a helium dilution TLC that was 68% of predicted but at the same time with a VA that was 93% of predicted and a DLCO that was 129% of predicted came across my desk.

I inspected the DLCO and lung volume results with a fine-toothed comb and could find nothing wrong with the selected test results. Just to make this more difficult however, the patient had a lot of difficulty performing the pulmonary function tests, and although each of the reported spirometry, lung volume and DLCO results appeared to have adequate test quality, they were not in any way reproducible.

Usually when there is a problem with helium dilution lung volume tests, FRC tends to be overestimated. About the only time it isn’t is when the test is terminated too soon, and that was not the case here: there was an essentially flat helium tracing for at least the last minute of testing. With DLCO tests the most common problem and the one that can affect VA and DLCO the most is an inadequate inspired volume, but in this case the FVC from spirometry, SVC from lung volumes and the inspired volume from the DLCO were almost identical. In the end I had to prevaricate and point to the lack of reproducibility and say that the contradictory results may be due to suboptimal test quality and that the TLC may be underestimated and the DLCO may be overestimated.

In this particular case I am more inclined to believe the lung volume results than the DLCO results in part because the DLCO of 129% of predicted didn’t fit the patient’s clinical picture all that well. This got me thinking about what would affect both the VA and the DLCO at the same time. VA and DLCO are intimately interconnected and for a given rate of CO uptake, DLCO scales with VA. VA is calculated from the inspired volume and the change in the insoluble component of the DLCO test gas, in this case methane.

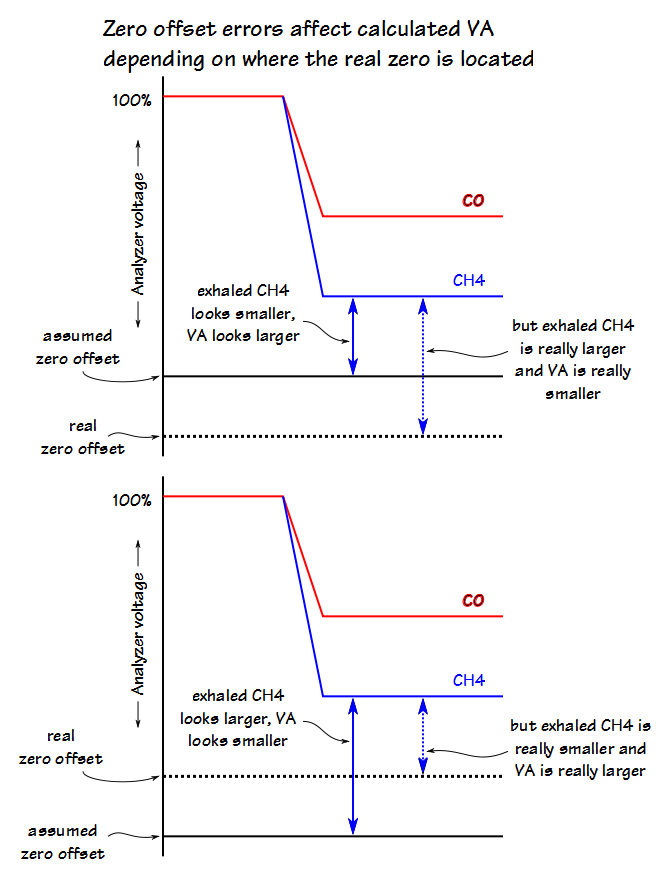
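A minimal sketch of that dilution calculation in Python may make the dependence on the exhaled methane reading easier to see. It is a simplification under stated assumptions: gas-condition (BTPS/STPD) conversions are left out, dead space is a single lumped value, and the function name and all of the numbers are hypothetical, not taken from any patient or from the test system's software.

```python
def alveolar_volume(inspired_volume_l, fi_tracer, fa_tracer, dead_space_l=0.0):
    """Single-breath alveolar volume from tracer-gas dilution.

    Simplified illustration: no BTPS/STPD conversion, and dead space is
    one lumped value subtracted from the inspired volume.
    """
    if fa_tracer <= 0:
        raise ValueError("exhaled tracer fraction must be positive")
    return (inspired_volume_l - dead_space_l) * fi_tracer / fa_tracer


# Hypothetical values, for illustration only
inspired = 3.0          # litres
fi_ch4 = 0.003          # inspired methane fraction (0.3%)
fa_ch4 = 0.002          # exhaled (alveolar sample) methane fraction

va = alveolar_volume(inspired, fi_ch4, fa_ch4, dead_space_l=0.15)

# Same breath, but the exhaled methane is read 5% too low
va_low_reading = alveolar_volume(inspired, fi_ch4, fa_ch4 * 0.95, dead_space_l=0.15)

print(f"VA with correct CH4 reading: {va:.3f} L")
print(f"VA with CH4 read 5% low:     {va_low_reading:.3f} L")
print(f"Ratio: {va_low_reading / va:.4f}")
```

Because the exhaled methane fraction is in the denominator, any underestimate of it inflates VA by the reciprocal of the error.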

But, this calculation depends on the accuracy of the gas analyzer, or more specifically that the analyzer’s zero is accurate and the analyzer output is linear. The analyzer’s specifications claim that it is linear to 1% over the full scale of the analyzer and I’ll have to take that at its word particularly since I have no way to verify it. Linearity is not a given, by the way. Back in the 1970’s the output of the MSA CO analyzer was a curve and I had to use a graph of the analyzer’s calibration curve to convert meter readings to actual percent CO when calculating DLCO test results.

The zero offset, on the other hand, is checked and logged with every calibration and we calibrate our analyzers daily. Interestingly, when I reviewed the CO and CH4 zero offsets I found that although most systems had reasonably stable zero offsets there was one system that had a highly variable CO zero offset and one system that had a highly variable CH4 offset. Despite the day to day variability in these offsets, no alarms had been triggered because the zero offsets were all within the manufacturer’s normal range.
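The gap between "in range" and "stable" can be illustrated with a small Python sketch that summarizes a channel's logged zero offsets. The offsets, their units and the acceptance range below are invented for illustration; the real values and limits are whatever the manufacturer's calibration log contains.

```python
from statistics import mean, stdev


def zero_offset_summary(offsets, low, high):
    """Summarize logged zero offsets for one analyzer channel."""
    return {
        "mean": mean(offsets),
        "sd": stdev(offsets),                      # day-to-day variability
        "spread": max(offsets) - min(offsets),
        "all_in_range": all(low <= v <= high for v in offsets),
    }


# Hypothetical daily zero offsets (arbitrary units) for two systems
stable_log = [0.101, 0.100, 0.102, 0.101, 0.100]
noisy_log = [0.060, 0.140, 0.085, 0.130, 0.070]

# Hypothetical acceptance range; a range check alone passes both systems
stable = zero_offset_summary(stable_log, low=0.0, high=0.2)
noisy = zero_offset_summary(noisy_log, low=0.0, high=0.2)

for name, summary in (("stable", stable), ("noisy", noisy)):
    print(name, summary)
```

Both logs pass the range check, so only the standard deviation or spread separates the steady system from the variable one.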

In one sense a variable zero offset is not necessarily a cause for alarm as long as the calibrated zero offset remains stable during the testing session. If the zero offset shifts however, and this shift is not accounted for, this can cause the concentration of the exhaled CO or CH4 to be either underestimated or overestimated.
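As a rough illustration, assume the simplest possible linear analyzer model, where concentration equals gain times the output voltage above zero. That model, the function name and every number below are assumptions made for the sketch; the manufacturer's actual conversion is not documented.

```python
def fraction_from_voltage(voltage, zero_offset, gain):
    """Assumed linear analyzer model: fraction = gain * (voltage - zero_offset)."""
    return gain * (voltage - zero_offset)


# Hypothetical calibration: 0.3% test gas reads 1.0 V above the zero
zero_at_calibration = 0.100      # volts
gain = 0.003 / 1.000             # gas fraction per volt

# Hypothetical drift: the true zero has moved up 20 mV since calibration
drift = 0.020
true_fraction = 0.0020           # actual exhaled CH4 fraction (0.2%)
voltage = (zero_at_calibration + drift) + true_fraction / gain

# Concentration computed with the out-of-date zero versus the current zero
stale = fraction_from_voltage(voltage, zero_at_calibration, gain)
fresh = fraction_from_voltage(voltage, zero_at_calibration + drift, gain)

print(f"with current zero: {fresh:.5f}")
print(f"with stale zero:   {stale:.5f}")
print(f"relative error:    {(stale - fresh) / fresh:+.1%}")
```

An upward zero drift that is not accounted for makes the reading too high, and a downward drift makes it too low. The absolute error is fixed by the drift, so it is proportionally larger for low exhaled concentrations.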

This is where it begins to get interesting. By looking carefully at our test result database I was able to see that the analyzer’s zero offset and gain are not stored as part of the DLCO test record. What is stored are the fractional concentrations of exhaled CO and CH4, and these values are calculated from the analyzer’s output voltage using the zero offset and gain.

So where is the zero offset and gain for CO and CH4 stored? In the calibration records, of course.

Isn’t the analyzer calibrated before every DLCO test? Yes it is, and a new calibration record is created every time too, but this is also where it gets very confusing, because when I looked at these new calibration records every one of them was an exact copy of the initial daily calibration record.

So what does this mean? It could mean that a new zero offset and gain are created during the pre-test calibration and they are used to calculate the fractional exhaled concentrations of CO and CH4, but that these new values are not being written to the database and are instead discarded after being used. This doesn’t explain why the initial calibration record is copied, however.

It could also mean that, despite going through a pre-test calibration, the new calibration results are discarded before being used and the zero offset and gain from the initial calibration are used instead and then re-written to the database.

I have no idea which of these is correct. This may very well be a software error that only affects record-keeping. On the other hand, if the CO and CH4 zero offsets and gains are not being updated then this opens the door to DLCO calculations that are inaccurate because the exhaled CO and CH4 concentrations are being calculated using information that is out of date.
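To get a feel for how much an out-of-date calibration could matter, here is a sketch that pushes a small bias in the exhaled CH4 or CO fraction through the standard single-breath DLCO equation. It is illustrative only: the inspired volume is treated as already in mL STPD, dead space corrections are omitted, the 3% biases are arbitrary, and none of the numbers come from a real patient or from the test system's software.

```python
import math


def dlco_single_breath(vi_ml_stpd, fi_co, fa_co, fi_ch4, fa_ch4,
                       breath_hold_s, pb_mmhg=760.0, ph2o_mmhg=47.0):
    """Return (VA in mL STPD, DLCO in mL/min/mmHg).

    Standard single-breath form, simplified: no dead space corrections.
    """
    va = vi_ml_stpd * fi_ch4 / fa_ch4
    # CO fraction in alveolar gas at the start of breath-holding,
    # inferred from how much the tracer gas was diluted
    fa_co_start = fi_co * fa_ch4 / fi_ch4
    dlco = (va * (60.0 / breath_hold_s) / (pb_mmhg - ph2o_mmhg)
            * math.log(fa_co_start / fa_co))
    return va, dlco


# Hypothetical reference breath
base = dict(vi_ml_stpd=3000.0, fi_co=0.003, fa_co=0.0010,
            fi_ch4=0.003, fa_ch4=0.0020, breath_hold_s=10.0)
va_ref, dlco_ref = dlco_single_breath(**base)

# Same breath with the exhaled CH4 read 3% low
va_ch4, dlco_ch4 = dlco_single_breath(**{**base, "fa_ch4": base["fa_ch4"] * 0.97})

# Same breath with the exhaled CO read 3% low
va_co, dlco_co = dlco_single_breath(**{**base, "fa_co": base["fa_co"] * 0.97})

for label, va, dlco in (("reference", va_ref, dlco_ref),
                        ("CH4 read low", va_ch4, dlco_ch4),
                        ("CO read low", va_co, dlco_co)):
    print(f"{label:>12}: VA x{va / va_ref:.3f}, DLCO x{dlco / dlco_ref:.3f}")
```

Note that the exhaled CH4 fraction appears twice, once in VA and once inside the logarithm, so an error on that channel does not move VA and DLCO by the same amount. An error in exhaled CO changes DLCO but leaves VA untouched.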

This information is, of course, not in the equipment manual. The manual doesn’t even mention the pre-test calibrations or anything whatsoever about how exhaled CO and CH4 concentrations are derived. I have passed a set of questions regarding this problem to our designated technical representative at the equipment’s manufacturer and will be interested in seeing what they have to say about this (and in seeing how long it takes to get an answer).

There is at least one other major question this investigation has brought up and that is why is the zero offset so highly variable on some analyzers and rock steady on others? Why is it that only one channel seems to be affected? The equipment manufacturer addresses zero offsets and gains that are in or out of range (although that begs the question of where these normal ranges come from) but not the degree of variability. Is this variability a function of the measurement process or the manufacturing process or the analog electronic components or is it an early sign of component failure? Is there anybody involved in the development or manufacture of the analyzer that even knows or is this just an accepted quirk?

All of our pulmonary function test equipment has become a black box. This is in large part a consequence of computers and computer software taking over the mechanical functions and calculations formerly performed by humans. We no longer know or have access to the information about how measurements are being made. Functions are buried in proprietary software and hardware, and we are asked to take the manufacturer’s word that the results are accurate. Please notice that I am not saying they’re not accurate, since I know that manufacturers often go to great lengths to ensure accuracy, just that we no longer have the ability to assess this for ourselves.

It is this inability to assess our equipment’s accuracy that bothers me the most. I see many research studies whose results come from pulmonary function or exercise test equipment. Significant physiological conclusions are drawn from these results, but the researchers are accepting as a given that the results are accurate without being able to verify this.

There are DLCO and exercise simulators that can be used for quality control but they are also expensive and it is difficult to convince hospital administration of the need for them. I know that I put an exercise simulator in my capital budget for several years but was turned down every time. Heck, I was turned down for capital budget money to replace aging equipment that hadn’t been supported by the manufacturer for years and was unrepairable so what chance did a simulator have? Even so I am not sure that a simulator would have made this particular problem any clearer. I know that my inclination would be to use a simulator immediately after performing a calibration and in this instance the more time that had elapsed since the last calibration the more likely the problem would be to have shown up. This is instead a situation where manufacturers need to be able to provide explicit information about how their equipment operates and how calculations are made but since this is usually considered to be proprietary information we’re not likely to get it anytime soon.

PFT Blog by Richard Johnston is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.