I’ve noticed for a while that there is often more variance between DLCO tests than I’d like to see. Some of this is of course attributable to differences in the way the patient performs each test. I am not overly surprised to see tests with different inspired volumes, different breath-holding times, different inspiratory times and so on produce different results (in fact I am surprised that so many tests that have been performed differently end up with almost identical results).

All too often though, I see tests that look like they were performed identically and yet have noticeably different results. For this reason I have been paying attention to small details to see if I can understand why this variance has been happening. I am well aware that there are “hidden” factors such as airway pressure (Valsalva or Mueller maneuvers) and cardiac output that can affect pulmonary capillary blood volume and therefore the DLCO. It is quite possible that much of the test-to-test variation is a result of these kinds of factors, but I’ve also found several test system software and hardware errors that have led to differences as well.

I am annoyed to say that I’ve found what could either be another system error or possibly a patient physiological factor that can lead to mis-estimated DLCO results. I’m annoyed not because I found it but because I’ve been looking at the DLCO test waveforms for a long time and never noticed this problem before. Of course since I’ve noticed it I now see it frequently.

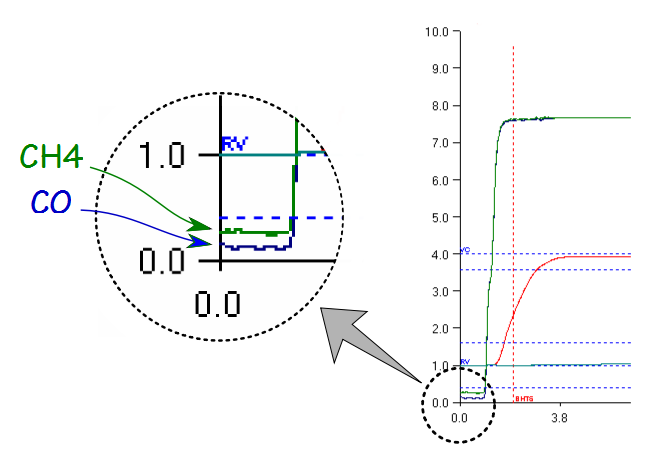

To understand this error you have to know that during the DLCO test the gas is sampled from a port just downstream of the patient mouthpiece. Just before the test has actually started the analyzer is sampling either room air or the patient’s exhaled air. As part of setting up for the DLCO test the CO & CH4 analyzer is checked with calibration gases but not actually calibrated. The “real” calibration occurs in a separate calibration module and is usually performed first thing in the morning. The CO and CH4 zero offsets and gains obtained from the “real” calibration are used in all subsequent calculations. At the beginning of the DLCO test the patient is supposed to exhale to RV and then inhale to TLC. The DLCO test waveforms include about a second’s worth of data from the gas analyzer just before the patient inhalation.

Since this part of the test waveform comes just before inhalation the CH4 and CO traces should be zero, yet as this example shows they are actually above it. This is not a large amount of offset, but for CH4 it actually works out to be about 3.5% of full scale. Assuming that this offset is real then for this test the exhaled CH4 would likely be overestimated by somewhere between 1.5 and 2.0 percent of what it should be, which means that VA and therefore DLCO would be underestimated by about the same amount. Realistically this is probably not enough to make a significant difference in the results but it bothers me because it shouldn’t be there in the first place.
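The link between an overestimated exhaled CH4 and an underestimated VA can be sketched numerically. This is only an illustration, assuming the simplified single-breath relationship in which VA is proportional to the ratio of inspired to exhaled tracer gas (dead space and gas-condition corrections are ignored), and assuming that only the exhaled reading is affected. The function name is hypothetical and is not taken from any test system's software.

```python
def va_change_from_ch4_overestimate(overestimate_fraction):
    """Fractional change in VA when exhaled CH4 reads high.

    Assumption: VA is proportional to FI_CH4 / FA_CH4, so a measured FA_CH4
    equal to true FA_CH4 * (1 + e) scales VA by 1 / (1 + e).
    """
    return 1.0 / (1.0 + overestimate_fraction) - 1.0


# The 1.5 to 2.0 percent range of CH4 overestimation discussed in the text
for e in (0.015, 0.020):
    change = va_change_from_ch4_overestimate(e)
    print(f"CH4 overestimated by {e:.1%}: VA changes by {change:.2%}")
```

Under these assumptions the change in VA is very slightly smaller in magnitude than the CH4 error itself, which is consistent with "about the same amount". Since DLCO is calculated from VA, it would shift in the same direction.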

So where is this offset coming from? I see two possibilities and they aren’t mutually exclusive.

First, since at this point in the test the gas analyzers are sampling the end of the patient’s exhalation, this could be residual gases from previous tests. In favor of this interpretation is the fact that the above example is from a patient’s third DLCO test. This patient’s first test showed no offset and the second test about half the offset seen here. Additionally, the CO waveform is offset less than the CH4 waveform which also makes sense since CO can be absorbed in the lung far more easily than CH4.

What goes against this interpretation is that the offsets are usually a lot more random and often don’t follow test order. I have seen noticeable offsets in CH4 and CO in a patient’s first test and no offsets in their second or third tests. I’ve also seen tests where the analyzer traces did not appear at all, and when I looked at the numerical data it was because they were negative values. As I mentioned, the analyzer’s zero offsets are often obtained many hours before a given test, and I’ve yet to see an analyzer that didn’t drift at least a bit, so it is quite possible the offset is due to analyzer drift.
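For anyone who wants to screen their own tests, the pre-inhalation part of the waveform can be checked with a few lines of code. The following is a minimal sketch, assuming the already-calibrated analyzer samples from the roughly one second before inhalation can be exported as a list of numbers in the same units as the analyzer's full scale. The function names and the 1% tolerance are hypothetical choices for illustration, not anything defined by a manufacturer or a standard.

```python
from statistics import mean


def baseline_offset_percent(pre_inhalation_samples, full_scale):
    """Mean pre-inhalation signal as a percentage of analyzer full scale."""
    return 100.0 * mean(pre_inhalation_samples) / full_scale


def classify_baseline(offset_percent, tolerance_percent=1.0):
    """Label the baseline; tolerance_percent is an arbitrary illustrative value."""
    if offset_percent > tolerance_percent:
        return "positive offset"
    if offset_percent < -tolerance_percent:
        return "negative offset"
    return "within tolerance"


# Made-up sample values in arbitrary units, with full scale taken as 100
ch4_samples = [3.4, 3.5, 3.6]
offset = baseline_offset_percent(ch4_samples, full_scale=100.0)
print(f"CH4 baseline: {offset:.1f}% of full scale ({classify_baseline(offset)})")
```

A check like this can only show that the baseline is not at zero. A positive offset could be either residual gas or drift, while a negative offset points toward drift, since residual gas cannot produce a reading below zero.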

As I said, these two interpretations are not mutually exclusive. Depending on how long an interval there is between DLCO tests there may well be some residual CH4 and CO in the patient’s exhaled air. I have previously advocated that a patient’s exhaled CO level should be checked before each DLCO test since elevated PACO levels will decrease the measured DLCO. I hadn’t thought about CH4, but if CO is being retained between tests then of course CH4 will be as well.

Since analyzer drift is probably never going to go away, I do not understand why the analyzer isn’t re-calibrated for every test. The calibration and pre-test analyzer check procedures go through exactly the same steps and take exactly the same length of time. There may well be a valid reason why it isn’t routinely re-calibrated, but so far nobody has been able to explain this to me and it is not documented anywhere either.

[The lack of documentation is a point that bothers me a lot. The only reason I even know that there is a difference between a “real” calibration and a pre-test analyzer check is from inspecting the database and getting my suspicions confirmed in a face-to-face conversation with one of the engineers that developed the software. I’ve found a number of small details in the DLCO and other tests that are not documented yet have the potential to have a significant effect on the results. I am concerned about this because I see research papers where the investigators say they did a such-and-such test using equipment from the so-and-so manufacturer yet nowhere do I see that they made any attempt to determine whether the equipment is actually producing accurate results. I am not necessarily asking that manufacturers openly publish the proprietary code for their test systems but maybe it’s time for the ATS-ERS to not just establish the procedural standards for tests but the computer algorithms for them as well.]

I have verified that this error is “real” at least in the sense that the values displayed in the DLCO test waveforms are matched by the numerical data. Since the numerical data has already been processed for the calibrated zero and gain, this reflects either a signal from the patient’s residual gases or analyzer drift. It’s not a big error and it’s unlikely to make a significant difference in the reported results, but many (many, many, many) years ago when I was a Cub Scout and we were packing our knapsacks for hikes we were told to “mind the ounces and the pounds would mind themselves”. The same principle applies here. Even though they are small, each detail matters, and enough of them acting together can end up reducing test results to garbage.

PFT Blog by Richard Johnston is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.