I like to think my lab is better than most, but every so often something comes along that makes me realize I’m probably only fooling myself.

Earlier this week I was reviewing the DLCO test data for a patient with interstitial lung disease. At first glance the spirometry and DLCO results pretty much matched the diagnosis and I had already seen they weren’t significantly different from the last visit. The technician had written “fair DLCO reproducibility” which was reason enough to review the test data but I’ve actually been making a point of taking a careful look at all DLCO tests, not just the questionable ones, for the last couple of weeks. I took one look at the test data, put my head in my hands, and counted to ten before continuing.

| | Reported: | %Predicted: | Test #1: | Test #2: | Test #3: |
|---|---|---|---|---|---|
| DLCO (ml/min/mmHg): | 13.22 | 66% | 10.08 | 92.17 | 16.36 |
| Vinsp (L): | | | 2.17 | 2.20 | 2.15 |
| VA (L): | 3.45 | 66% | 2.89 | 2.93 | 4.02 |
| DL/VA: | 3.78 | 91% | 3.49 | 31.5 | 4.07 |
| Exhaled CH4 (% of inhaled): | | | 60.84 | 60.94 | 43.15 |
| Exhaled CO (% of inhaled): | | | 34.46 | 0.51 | 23.13 |

Even though the averaged DLCO results were similar to the last visit, the two tests they were averaged from were quite different. Reproducibility was not fair, it was poor. But far more than that, something was seriously wrong with the second test and the technician hadn’t told anybody that they’d had problems with the test system. {SIGH}. It’s awful hard to fix a problem when you don’t even know there is one in the first place.
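To put a number on "poor", here is a minimal sketch of a repeatability check. It assumes the 2005 ATS/ERS criterion (two tests within 3 ml/min/mmHg of each other, or within 10% of the highest value); the function name and defaults are illustrative and are not part of any lab software.

```python
def dlco_repeatable(a, b, abs_limit=3.0, rel_limit=0.10):
    """Return True if two DLCO values (ml/min/mmHg) agree within either limit.

    abs_limit and rel_limit are assumed from the 2005 ATS/ERS criterion.
    """
    diff = abs(a - b)
    return diff <= abs_limit or diff <= rel_limit * max(a, b)


# The two tests that were averaged for the reported DLCO (from the table above).
test1, test3 = 10.08, 16.36

average = (test1 + test3) / 2
difference = abs(test1 - test3)

print(f"average    = {average:.2f} ml/min/mmHg")
print(f"difference = {difference:.2f} ml/min/mmHg")
print("repeatable:", dlco_repeatable(test1, test3))
```

The average reproduces the reported value, but the difference between the two tests is about twice the absolute limit, which is why the average by itself is misleading.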

I usually review reports in the morning the day after the tests have been performed, so the patient was long gone by the time I saw the results. This left me with a problem that I’m sure we’ve all had at one time or another and that was whether any of the DLCO results were reportable.

To this end, the first thing I looked at was how well the patient had performed the tests. The inspired volumes ranged from 96% to 98% of the FVC and the breath-hold time (BHT) ranged from 10.5 to 11.4 seconds, both of which are well within the ATS/ERS criteria. A look at the volume-time graphs showed a rapid inspiration, a rapid expiration, no glitches during the breath-holding period, and an expiratory sample correctly positioned within the alveolar plateau. So mechanically at least, all of the DLCO maneuvers had been performed with good quality.
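The maneuver checks described above can be sketched as follows. The thresholds are assumptions taken from the 2005 ATS/ERS standard (inspired volume at least 85% of the vital capacity, breath-hold time of 10 ± 2 seconds). Since the per-test values were not listed, the sketch checks every combination of the range endpoints.

```python
from itertools import product

# Assumed limits (2005 ATS/ERS single-breath DLCO standard).
MIN_VI_PCT_VC = 85.0     # inspired volume as a percent of vital capacity
BHT_TARGET_S = 10.0      # target breath-hold time in seconds
BHT_TOLERANCE_S = 2.0    # allowed deviation from the target


def maneuver_acceptable(vi_pct_vc, bht_s):
    """Check inspired volume and breath-hold time against the assumed limits."""
    volume_ok = vi_pct_vc >= MIN_VI_PCT_VC
    bht_ok = abs(bht_s - BHT_TARGET_S) <= BHT_TOLERANCE_S
    return volume_ok and bht_ok


# Ranges observed across the three tests.
vi_range = (96.0, 98.0)    # % of FVC
bht_range = (10.5, 11.4)   # seconds

results = {
    (vi, bht): maneuver_acceptable(vi, bht)
    for vi, bht in product(vi_range, bht_range)
}

for (vi, bht), ok in results.items():
    print(f"Vinsp {vi:.0f}% of FVC, BHT {bht:.1f} s -> acceptable: {ok}")
```

This only covers the two numeric criteria; the graph review (inspiration speed, glitches, sample placement) still has to be done by eye.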

The real differences between the tests were in the baseline and exhaled gas concentrations. For this reason test #2 can pretty much be discounted immediately (and not just because the measured DLCO was 697% of predicted!). Our lab software reports the exhaled CO and CH4 as a percent of the inhaled concentration. An exhaled CO concentration of 0.51% is more than somewhat unlikely physiologically speaking (unless the breath hold period had been a minute or so but not for one that was 10 seconds long) and exhaled CO is usually somewhere between 20% and 60%. The reason for the low CO reading became more apparent when I looked at the baseline CH4 and CO.

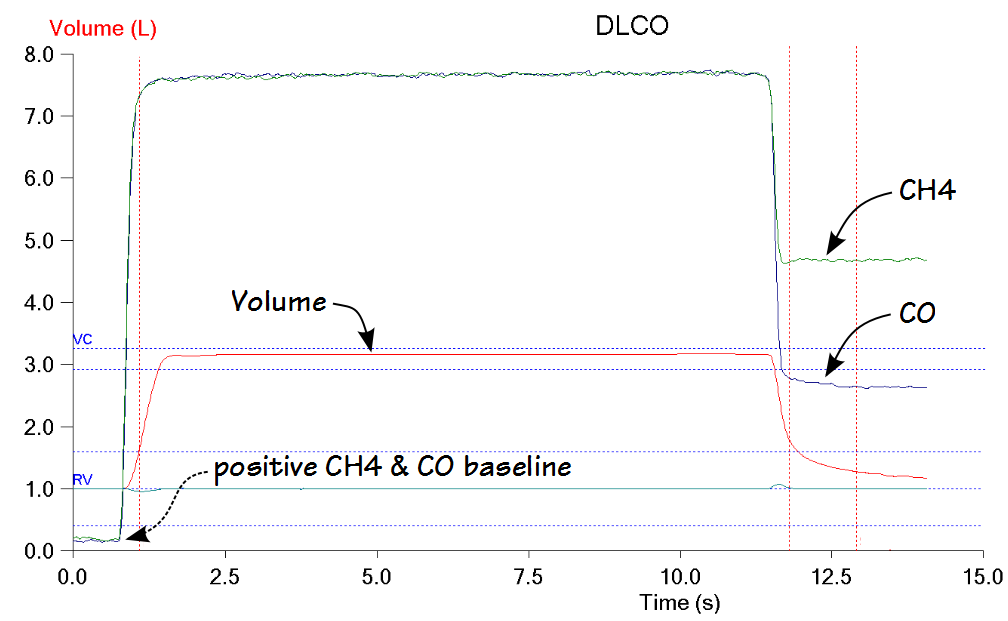

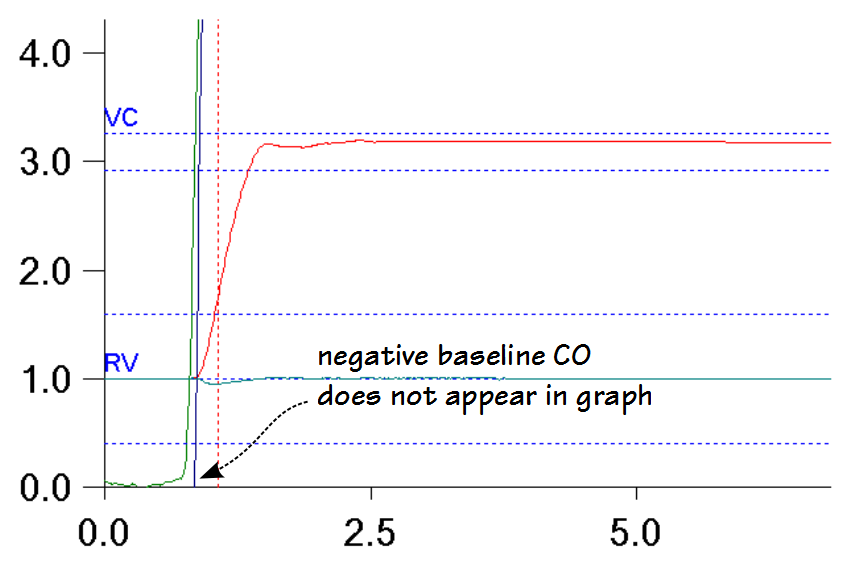
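The rule of thumb for exhaled CO mentioned above (usually somewhere between 20% and 60% of the inhaled concentration) lends itself to a simple screening check. This is only a sketch: the limits are that rule of thumb, not a published standard, and the names are made up for illustration.

```python
# Typical range for exhaled CO as a percent of inhaled CO (rule of thumb only).
EXHALED_CO_TYPICAL = (20.0, 60.0)

# Exhaled CO for each test, as reported by the lab software.
exhaled_co = {"Test #1": 34.46, "Test #2": 0.51, "Test #3": 23.13}


def co_is_plausible(pct, limits=EXHALED_CO_TYPICAL):
    """Return True if the exhaled CO percentage falls inside the typical range."""
    low, high = limits
    return low <= pct <= high


suspect = [name for name, pct in exhaled_co.items() if not co_is_plausible(pct)]
print("Tests with implausible exhaled CO:", suspect)
```

A value outside the range does not prove an analyzer fault, but it is a cheap prompt to look at the baseline readings before accepting the test.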

I need to digress for a moment here and explain that our lab software does not report the baseline CH4 and CO readings, other than as the first second of the DLCO graph. If the CH4 and CO analyzer readings have a positive baseline (> 0.0) then their traces will be slightly elevated on the graph.

If the baseline CH4 or CO is negative however, they don’t appear in the graph at all and there is no way to tell how negative they are.

It’s possible to download the raw data from the DLCO test into a spreadsheet but since none of the computers attached to our test systems have spreadsheet software it can only be done from one of our desktop workstations. In addition the CO and CH4 are reported on the spreadsheet as fractional concentrations, and not as a percent of the inspired concentrations. It’s not real hard to convert back and forth, but it is another step, and all this tends to mean that the baseline CH4 and CO aren’t easily available.

Anyway, the baseline CH4 and CO values should be close to zero and for the CH4 reading of 0.001 (+0.35%) this was true. The baseline CO reading however, was -0.194 (-63.9%) which means that for some reason the zero offset of the analyzer was way off and this in turn was causing the exhaled CO reading to be reduced as well.
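The conversion between the spreadsheet values and percent of inspired is simple enough to sketch. The inspired concentrations are not given here, so this assumes a nominal 0.3 for both gases in the same units as the spreadsheet values. That is why the percentages come out close to, but not exactly, the ones quoted above. The flagging tolerance is purely hypothetical.

```python
# Assumption: nominal inspired concentrations, in the same units as the
# spreadsheet readings. The measured inspired values were not available.
NOMINAL_INSPIRED = {"CH4": 0.3, "CO": 0.3}

# Hypothetical tolerance for an acceptable baseline, in percent of inspired.
ZERO_TOLERANCE_PCT = 5.0


def percent_of_inspired(reading, inspired):
    """Convert a spreadsheet reading to a percent of the inspired concentration."""
    return 100.0 * reading / inspired


def reading_from_percent(pct, inspired):
    """Convert a percent of inspired back to a spreadsheet reading."""
    return pct * inspired / 100.0


# Baseline readings for test #2, taken from the raw data.
baseline = {"CH4": 0.001, "CO": -0.194}

baseline_pct = {
    gas: percent_of_inspired(value, NOMINAL_INSPIRED[gas])
    for gas, value in baseline.items()
}
flagged = [gas for gas, pct in baseline_pct.items() if abs(pct) > ZERO_TOLERANCE_PCT]

for gas, pct in baseline_pct.items():
    print(f"{gas} baseline: {pct:+.2f}% of inspired")
print("Baselines outside tolerance:", flagged)
```

Whatever tolerance is chosen, a CO baseline this far below zero stands out immediately once it is expressed as a percentage.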

Since our test systems go through what looks like a calibration before each DLCO test, how could this happen? This is not documented (or at least not at all clearly) in the equipment’s manual, and I found it out only by talking to the software engineers several years ago, but the pre-test “calibration” only checks the gain of the CH4/CO analyzer, and only so it can set a gain factor used when calculating the output from the analyzer. Even though the system samples both room air and the inhaled gas mixture during this step, as far as I can tell neither the gain nor the zero of the analyzer is checked against a “real” calibration (which is usually performed first thing each morning).

So the DLCO from test #2 isn’t any good but how about the other two tests? For both test #1 and test #3 the baseline CH4 and CO were within normal limits. The difference between the two tests is entirely in the exhaled CH4 and CO. The lower CH4 in test #3 leads to a higher calculated VA and to some extent this is hard to explain physiologically because the inspired volumes from test #1 and test #3 were essentially identical. Interestingly, the exhaled CH4 and VA from test #2 match those of test #1. Admittedly the CO readings from test #2 were off but the baseline CH4 was normal and there is no reason otherwise to believe that the CH4 readings from test #2 are inaccurate.
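The link between exhaled CH4 and VA can be checked with a little arithmetic. In a single-breath tracer dilution, VA is roughly proportional to the inspired volume multiplied by the ratio of inhaled to exhaled tracer gas. The sketch below ignores dead space and gas-condition corrections (a simplifying assumption), so it compares only the ratio between tests, not the absolute VA.

```python
def dilution_volume(vinsp_l, exhaled_ch4_pct):
    """Uncorrected dilution volume: inspired volume x (inhaled / exhaled CH4).

    exhaled_ch4_pct is exhaled CH4 as a percent of the inhaled concentration.
    Dead space and BTPS/STPD corrections are deliberately left out.
    """
    return vinsp_l * 100.0 / exhaled_ch4_pct


# Values from the results table.
vinsp_1, ch4_1, va_1 = 2.17, 60.84, 2.89   # test #1
vinsp_3, ch4_3, va_3 = 2.15, 43.15, 4.02   # test #3

predicted_ratio = dilution_volume(vinsp_3, ch4_3) / dilution_volume(vinsp_1, ch4_1)
reported_ratio = va_3 / va_1

print(f"VA ratio predicted from CH4 dilution: {predicted_ratio:.3f}")
print(f"VA ratio from reported values:        {reported_ratio:.3f}")
```

The two ratios agree closely, which supports the point that the higher VA in test #3 comes almost entirely from the lower exhaled CH4 and not from any difference in inspired volume.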

The problem with using VA this way is that the patient has never had lung volume measurements before, only spirometry and DLCO, so there is no TLC for comparison. The VA from the last two visits however, was 2.72 L and 2.91 L and this looks a lot more like the VA from test #1 and test #2 than it does from test #3 and this makes test #3 questionable as well.
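For a rough sense of scale, the comparison with the previous visits can be expressed as a percent deviation from their mean. Using the mean of two prior values as the reference is just a convenience for this sketch, not an established method.

```python
# VA (L) from the last two visits and from the three current tests.
previous_va = [2.72, 2.91]
current_va = {"Test #1": 2.89, "Test #2": 2.93, "Test #3": 4.02}

reference_va = sum(previous_va) / len(previous_va)

# Percent deviation of each current VA from the mean of the prior visits.
deviation_pct = {
    name: 100.0 * (va - reference_va) / reference_va
    for name, va in current_va.items()
}

for name, dev in deviation_pct.items():
    print(f"{name}: VA deviates {dev:+.1f}% from prior visits")
```

Tests #1 and #2 land within a few percent of the earlier visits, while test #3 is far higher.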

Based on all of the above, test #1 is left with the fewest reasons to believe it is inaccurate. Even so, compared to the last two visits it is lower by approximately 3 ml/min/mmHg (-23%), and there has been essentially no change in spirometry in the meantime. Given the nature of interstitial disease a decline like this certainly isn’t all that unlikely, but it does leave some doubt about test #1 as well, though less than for either of the other tests.

At this point I felt I had no choice but to select test #1 to be reported. I did equivocate though by also noting that the DLCO reproducibility was poor and test quality was questionable.

This situation brings a number of problems to light.

- Given the DLCO of 697% of predicted the tech should have (if possible) moved the patient to a different test system following test #2. At the very least, the DLCO gas analyzer should have been re-calibrated before test #3 was performed.

- The tech should have reported the problems with the test system.

- The tech should have written “poor reproducibility”, not “fair reproducibility” (which is admittedly a minor point but demonstrates a lack of understanding about what constitutes reproducibility).

- The test system should go through a real calibration before each DLCO test, and not just check the gain of the analyzers. At the very least it should compare the zero and gain of the gas analyzer against the last calibration and flag any discrepancies (which is also probably a good idea for the real calibrations).

- The test system software should report the baseline zero for CH4 and CO as part of the test results.

For the first three items I will need to check the lab’s written DLCO procedure, make sure it addresses these issues, and update it if necessary. Regardless of what is or isn’t in the written procedure I will also need to put together an email for all the techs that will either remind or notify them of these problems (and hope they remember it in the future). I could wish that the lab staff were more technically inclined and better able to detect these kinds of problems with testing but this doesn’t seem to be a common aptitude any more (if it ever was).

As far as the last two items go, I notified the manufacturer of our equipment about these issues and other similar ones over three years ago. We went through a software update three months ago and these problems are still there so I guess it wasn’t a priority for them.

To be honest, problems like this are fairly rare. I’ve been reviewing every DLCO test for the last three weeks (I’ve always reviewed the questionable ones and I get called in to assess results while the patient is still there reasonably often) and the majority of them have results that are either highly repeatable or where the differences can be explained by problems with the test maneuver. When DLCO tests go wrong however, it can be difficult to determine what, if anything, can be salvaged, particularly when you see the results the day after tests were performed.

One thing that continues to bother me is that we are kept at a distance from the hardware in our test systems by the computer software. I strongly suspect that the baseline error in the CO analyzer for test #2 is due to a computer error of some kind. Gas analyzers contain analog components and their zeros and gains do drift over time, but not that much and not in such a short time. The tests were performed at 3:09 PM, 3:16 PM and 3:21 PM, and it is unlikely that the zero baseline (and not the gain as well) for CO would start out normal, change drastically and then return to normal (!!) over the span of 12 minutes. But the gas analyzer is a sealed box buried inside the test system and there aren’t any readouts. There is no way to verify the gas analyzer’s operation other than through our test system software and even though I can download the raw data the zero and gain have already been corrected from the original analog signals by the software.

The ATS/ERS wrote technical standards for the accuracy and precision of test systems over 10 years ago but they are mostly based on technology that is 10 to 20 years older than that. What I’d like to see is that the next set of ATS/ERS standards not only address current technology and practices but include a mandate that all PFT test systems include a real-time “diagnostic” mode that allows direct access to the “real” raw data (voltages and the states of valves and switches) from all the system components (similar to the OBD2 interface in all cars and trucks). I’ll be the first to admit that most labs wouldn’t make much use of this (and even then probably mostly by a hospital’s clinical engineering department or the manufacturer’s service techs) but it would make it possible for technologists and researchers to verify that a test system is operating correctly and accurately.

PFT Blog by Richard Johnston is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License

Do you have one of the DLCO test systems developed (and sold, at least a few years ago) by Crapo and his partner Bob Jensen in SLC? I had one, and loaned it to other labs to check their systems. As you are aware, it uses a few different mixes, usually approximating what is seen in exhaled gases in the clinical world.

Out of curiosity, I’ve responded a few times to your posts, and I think we probably know a few of the same people, e.g. Kevin McCarthy, but my responses have always either not been posted or been deleted. Any reason why?

As to your current post, I think in the first part of the second paragraph you pretty much answered your issue; it was approx. the same as the previous visit, and you had one outlier, explained with your rationale. Glitches we’ve all seen in our time.

Who’s your equipment vendor? Morgan? I wasn’t sure I recognized the graph, but I’ve been retired 6 years.

Cheers,

Mike Mulligan

Mike –

You are referring to the DLCO simulator, patent #6415642, that is currently being sold by Hans Rudolph. I blogged about this device several years ago. We use it for quality control and it works reasonably well but I’ve found the DLCO results from this device aren’t perfectly reproducible, with a repeatability of around 3-5%. The reasons for this are unclear (not sure if it’s our gas analyzer or whether room air gas is getting in the connecting tubing) but this is better than biological QC. FYI, we are an Nspire lab. I’ve worked with Collins equipment throughout my career. The problem with the results being the same as last time was that this was only due to averaging two quite different results. During the last several visits the patient had good repeatability so this doesn’t quite jibe.

Mike, you’ve commented three times, once to Blog Author, once to my blog on the FEV1/SVC ratio, and this one, your latest. I have responded each time and have even emailed you (although I never got a reply). I have not knowingly deleted any of your comments. It’s possible they ended up in the system spam folder, but I check the spam folder and have never found any comments from you (and for that matter I’ve never found a comment in the spam folder that wasn’t spam).

Regards, Richard